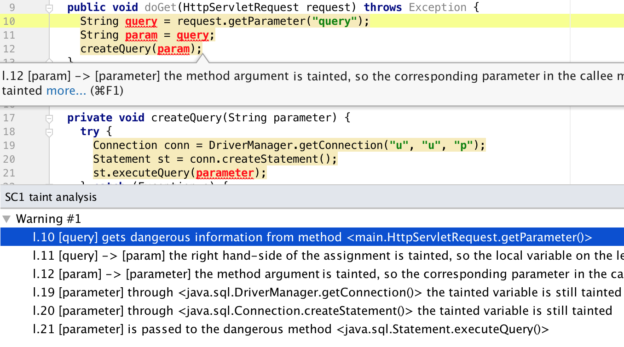

Static analysis tools perform complex reasoning to produce warnings, and explaining that reasoning to users is a known challenge for these tools. We present the concept of analysis automata and detail three applications that enhance explainability: (1) warning understanding, (2) warning classification, and (3) detection of bad analysis patterns.

We present MUDARRI, an IntelliJ plugin that illustrates the first application, warning understanding.
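As a rough illustration of how an automaton can support the first two applications, here is a minimal Python sketch under a purely hypothetical model. States are abstract data-flow facts, and each transition is labeled with the analysis rule that derived the next fact. Under that assumption, explaining a warning amounts to replaying a path from a starting fact to the warning state. Grouping warnings by the sequence of rules on that path gives a crude form of classification. The names `AnalysisAutomaton`, `add_rule`, `explain`, and `rule_signature` are invented for this example. They are not MUDARRI's API and do not claim to reproduce the authors' formalism.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Step = Tuple[str, str]  # (rule name, fact derived by that rule)


# Hypothetical model: states are abstract data-flow facts (e.g. "t tainted"),
# and each transition records which analysis rule derived one fact from another.
@dataclass
class AnalysisAutomaton:
    # Maps a fact to the (rule, next fact) pairs derived from it.
    transitions: Dict[str, List[Step]] = field(default_factory=dict)

    def add_rule(self, src: str, rule: str, dst: str) -> None:
        """Record that applying `rule` to fact `src` derived fact `dst`."""
        self.transitions.setdefault(src, []).append((rule, dst))

    def explain(self, start: str, warning: str) -> Optional[List[Step]]:
        """Return the shortest list of (rule, fact) steps from `start` to
        `warning` using breadth-first search, or None if unreachable."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            state, path = queue.popleft()
            if state == warning:
                return path
            for rule, nxt in self.transitions.get(state, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [(rule, nxt)]))
        return None


def rule_signature(path: List[Step]) -> Tuple[str, ...]:
    """Classify a warning by the ordered sequence of rules that produced it."""
    return tuple(rule for rule, _ in path)


if __name__ == "__main__":
    # Hypothetical taint-analysis trace ending in a warning.
    a = AnalysisAutomaton()
    a.add_rule("source: readInput()", "gen-taint", "s tainted")
    a.add_rule("s tainted", "assign", "t tainted")
    a.add_rule("t tainted", "call-sink", "WARNING: t reaches exec()")

    path = a.explain("source: readInput()", "WARNING: t reaches exec()")
    for rule, fact in path:
        print(f"{rule:10} -> {fact}")  # one explanation step per line
    print(rule_signature(path))  # ('gen-taint', 'assign', 'call-sink')
```

Breadth-first search is used here only because it returns the shortest explanation, which is usually the easiest for a user to follow. A real tool might instead prefer the path the analysis actually took.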

Artifacts

Publications

- TSE 2022: Explaining static analysis with rule graphs (Lisa Nguyen Quang Do, Eric Bodden).